Abstract

Digital image processing techniques and algorithms have become a great tool to support medical experts in identifying, studying, diagnosing certain diseases. Image segmentation methods are of the most widely used techniques in this area simplifying image representation and analysis. During the last few decades, many approaches have been proposed for image segmentation, among which multilevel thresholding methods have shown better results than most other methods. Traditional statistical approaches such as the Otsu and the Kapur methods are the standard benchmark algorithms for automatic image thresholding. Such algorithms provide optimal results, yet they suffer from high computational costs when multilevel thresholding is required, which is considered as an optimization matter. In this work, the Harris hawks optimization technique is combined with Otsu’s method to effectively reduce the required computational cost while maintaining optimal outcomes. The proposed approach is tested on a publicly available imaging datasets, including chest images with clinical and genomic correlates, and represents a rural COVID-19-positive (COVID-19-AR) population. According to various performance measures, the proposed approach can achieve a substantial decrease in the computational cost and the time to converge while maintaining a level of quality highly competitive with the Otsu method for the same threshold values.

Similar content being viewed by others

1 Introduction

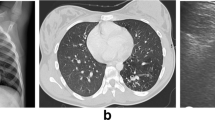

Among the wide variety of sciences that can offer support during global pandemics, digital image processing is the one that can assist many aspects of medicine. Medical imaging systems are used to identify or study the occurrence/development of certain diseases. The domain of medical imaging is developing rapidly due to advances in image processing techniques (e.g., applied machine learning) that include image recognition, segmentation, enhancement, and analysis. Among various modalities, computed tomography (CT) can be used effectively to screen and monitor diagnosed cases and has a direct impact on health outcomes because it assists healthcare professionals to administer rapid, accurate, and large-scale diagnostic screenings [18]. CT scans of the chest may be helpful in diagnosing COVID-19 in patients with a high clinical suspicion of infection but who have not been recommended for routine screening. In this type of medical imaging, cross-sectional tomographic images (slices) of the body are produced by using multiple X-ray measurements. Chest CT scans of patients with COVID-19-associated pneumonia usually show ground-glass opacification, possibly with consolidation.

Among various available medical image processing techniques and algorithms, image segmentation methods are the best tools to simplify image representation and analysis. Image segmentation is considered an essential part of the pattern recognition, computer vision, and medical image processing techniques [13, 30]. In image segmentation, the image is divided into regions of interest, and the segmentation process can be conducted by using shape, size, color, texture, illumination, etc. [16]. Image segmentation approaches can be categorized into four main types: clustering, region-based, texture analysis, and histogram thresholding. Among these various segmentation approaches, histogram thresholding is known for its robustness, simplicity, and accuracy and therefore is widely applied in image segmentation. In histogram thresholding, the histogram data are extracted from a given image and the best threshold values are used to categorize pixels in different regions [12]. For automatic image thresholding, traditional statistical approaches such as the Otsu and Kapur methods are the typical benchmark algorithms.

Multilevel thresholding methods deliver better results when compared to other traditional thresholding methods [14, 17]. A wide range of multilevel segmentation methods have been proposed in the literature [40, 50]. Furthermore, obtaining optimal threshold values is considered a practical optimization problem. Therefore, many nature-inspired algorithms have been presented in recent years for solving difficult practical optimization issues. Because of their adaptability and efficiency, those algorithms have attracted the interest of both scientists and researchers.

Despite their simple design, nature-inspired algorithms are effective at addressing extremely difficult optimization problems. Those algorithms are an essential component of modern global optimization algorithms, artificial intelligence, and informatics in general. Many metaheuristic algorithms have the property of reaching a global optimum for the problem after a relatively small number of iterations. Therefore, various evolutionary and swarm-based strategies have been combined with statistical thresholding methods such as the Kapur method and the Otsu method in order to find the optimal threshold values for multilevel segmentation [52]. The Harris hawks optimization (HHO) algorithm is a well-known swarm-based, gradient-free optimization technique. HHO has received much interest from scientists and researchers in terms of its performance, quality of results, and its acceptable convergence in dealing with different applications in real-world problems.

In this work, the Harris hawks optimization technique is combined with Otsu's method to effectively reduce the required computational cost while maintaining optimal results. The proposed method is validated using publically accessible imaging datasets, which include chest scans with clinical and genetic correlates and reflect a COVID-19-positive (COVID-19-AR) population in rural areas. For the same threshold values, the suggested strategy can achieve a significant reduction in computing cost and time to converge while retaining a level of quality that is highly competitive with the Otsu method, according to different performance criteria.

The content of the paper can be summarized as follows: Sect. 2 presents a literature review of CT-based methods for efficient lung segmentation. Section 3 describes the implementation of Harris hawks optimization algorithm for multilevel image segmentation. Section 4 outlines the Harris hawks optimization for multi-threshold image segmentation evaluation with some confirmatory ranking results and analysis. Section 5 presents the evaluation of results. Finally, Sect. 6 presents the conclusions of this paper.

2 Literature review

In the field of CT imaging, the accurate segmentation of lung can lead to the accurate diagnosis of lung infection and to the correct detection and classification of lung nodules. For this reason, various CT-based methods for efficient lung segmentation have been proposed in the literature. For example, region-growing techniques have been applied in works such as Netto et al. [31], while other works such as Keshani et al. [26] have applied active contours techniques. On the other hand, authors in Messay et al. [32], Pu et al. [43], and Tan et al. [49] have utilized thresholding strategies such as rule-based, fuzzy inference methods, and intensity in order to detect nodules in CT images. Template matching methods have also been widely used in some works such as Akram et al. [3], Jo et al. [24], and Serhat Ozekes and Ucan [47]. Meanwhile, other works such as da Silva Sousa et al. [8] and Narayanan et al. [37] have designed composite discriminative feature techniques to classify and detect lung nodules through the use of different classification methods. In addition, some other machine learning methods have been investigated for lung nodule detection, such as those presented in Furqan et al. [19], Gruetzemacher et al. [21], Jiang et al. [23], Pehrson et al. [42], and Zhang et al. [53].

Recently, contextual image information (i.e., channel and spatial) is shown to be effective for semantic segmentation as proposed by Li et al. [28], which interactively explores the spatial contextual information and the channel contextual information. In their work, the spatial contextual module is exploited to uncover the spatial contextual dependency between pixels by exploring the correlation between pixels and categories, while the semantic features are extracted using the channel contextual module by modeling the long-term semantic dependence between channels. Results show that the two context modules are adaptively integrated to achieve better outcomes, by connecting them in tandem for interactive training for mutual communication between the two dimensions.

Image thresholding techniques can be categorized as either bi-level or multilevel. In bi-level techniques, designated objects are differentiated by using a single threshold value. In multilevel techniques, the image is divided into multiple regions using multiple threshold values [17]. Therefore, the multilevel thresholding techniques give more accurate segmentation results as compared to the bi-level ones [27]. Moreover, the optimal threshold values in multilevel thresholding techniques can be classified into parametric and nonparametric approaches [38].

Traditional image segmentation approaches such as the Otsu [39] and the Kapur et al. [25] methods use the maximization of the variance between classes and the histogram entropy to obtain the best possible threshold values. Although those methods obtain optimal results, they impose high computational cost that increases as the number of thresholds is increased. More recently, the use of metaheuristic algorithms in combination with traditional statistical approaches has been proved to work effectively and to reduce the computational cost when multilevel thresholding is required.

Well-known automatic thresholding algorithms, such as the algorithms in the Otsu and the Kapur methods, function by performing a set of steps. These steps include processing the input image, obtaining the image histogram, computing the threshold value, and finally converting the image pixels into white in regions where the saturation is greater than the obtained threshold and into black otherwise. However, these algorithms utilize different methods to compute the threshold value. For instance, the algorithm in Otsu’s method processes the image histogram and segments the objects by minimizing the variance on each of the classes. The obtained histogram contains two expressed peaks that represent different ranges of intensity value.

In Otsu’s method, the image histogram is separated into two clusters by a threshold that is defined by minimizing the weighted variance of the classes denoted by \(\sigma_{{\text{w}}}^{2} \left( t \right)\), where \(\sigma_{{\text{w}}}^{2} \left( t \right) = w_{1} \left( t \right)\sigma_{1}^{2} \left( t \right) + w_{2} \left( t \right)\sigma_{2}^{2} \left( t \right)\), which is defined as the Otsu model, where \(w_{1} \left( t \right){ },{ }w_{2} \left( t \right)\) are the probabilities of the two classes divided by a threshold \(\left( t \right)\), where \(\left( {0 \le t \le 255} \right)\). Otsu’s method defines two alternatives to find the threshold. The first alternative is to minimize the within-class variance \(\sigma_{{\text{w}}}^{2} \left( t \right)\), and the second is to maximize the between-class variance using \(\sigma_{{\text{b}}}^{2} \left( t \right) = w_{1} \left( t \right)w_{2} \left( t \right)\left[ {{ }\mu_{1} \left( t \right) - \mu_{2} \left( t \right)} \right]^{2}\), where \(\mu_{1}\) is a mean of class \(i\). The cluster probability function is used to calculate the probability \(P\) for each pixel value in the two separated clusters \(C_{1} ,{ }C_{{2{ }}}\) as \(w_{1} \left( t \right) = \sum\nolimits_{i = 1}^{t} P \left( i \right)\) and \(w_{2} \left( t \right) = \sum\nolimits_{i = t + 1}^{I} P \left( i \right),{ }\) respectively [48].

Digital images can be represented as an intensity function \(f\left( {x,y} \right)\) that includes gray-level values, with a total number of pixels \(n\) and the total number of pixels with a specified gray-level \(i\). Therefore, the probability of the occurrence of gray-level \(i\) is \(P\left( i \right) = n_{i} /n\). The pixel intensity values for \(C_{{1{ }}}\) and \(C_{2}\) are within the range of \(\left[ {1,t} \right]\) and \(\left[ {t + 1,I} \right]\), respectively, where \(I\) is the maximum intensity value (i.e., 255). The means for \(C_{1} ,{ }C_{2}\), denoted by \(\mu_{1} \left( t \right),{ }\mu_{2} \left( t \right)\), are obtained by \(\mu_{1} \left( t \right) = \sum\nolimits_{i = 1}^{t} i P\left( i \right)/w_{1} \left( t \right)\) and \(\mu_{2} \left( t \right) = \sum\nolimits_{i = t + 1}^{I} i P\left( i \right)/w_{2} \left( t \right)\), respectively. Therefore the \(\left( {\sigma_{1}^{2} ,{ }\sigma_{2}^{2} } \right)\) values can be obtained by \(\sigma_{1}^{2} \left( t \right) = \sum\nolimits_{i = 1}^{t} {\left[ {i - \mu_{1} \left( t \right)} \right]^{2} } P\left( i \right)/w_{1} \left( t \right)\), and \(\sigma_{2}^{2} \left( t \right) = \sum\nolimits_{i = t + 1}^{I} {\left[ {i - \mu_{2} \left( t \right)} \right]^{2} } P\left( i \right)/w_{2} \left( t \right),\) respectively [48].

Obtaining optimal threshold values is considered a practical optimization problem. Therefore, many nature-inspired algorithms have been presented in recent years for solving difficult practical optimization problems [5,6,7, 33,34,35, 44,45,46]. Some of the metaheuristic algorithms are designed for image processing applications and image thresholding specifically include [2], which proposed the use of bird-mating optimization together with the Otsu and the Kapur methods as a strategy to find the best thresholds for image segmentation . On the other hand, Mousavirad and Ebrahimpour-Komleh [36] used the human mental search algorithm with Otsu’s and Kapur’s methods. Another nature-inspired approach was put forward in Pare et al. [41], in which Rényi entropy is used for locating the best thresholds using the bat algorithm. Other works have proposed using different algorithms for image segmentation, such as Liang et al. [29], which used Tsallis entropy and the grasshopper optimization algorithm, Baby Resma and Nair [4] which used the krill herd optimization algorithm and [54] which combined the firefly optimization algorithm to reduce the computation time and enhance the accuracy of image segmentation . A comprehensive review of available metaheuristic algorithms can be found in Pare et al. [41].

As presented in this section, there are several approaches that have been applied in the recent years for medical image segmentation. The first group of those approaches employed the concept of region-growing and active contours techniques to segment images and isolate the region-of-interest areas. Although this group of techniques can lead to a good level of accuracy, those approaches need to specific knowledge in the domain, and at the mean time they usually need to interact with human medical experts. This adds a pressure on human experts and also delays the process of treatment.

There are other different approaches such as template matching methods and composite discriminative feature that have been also utilized by a few researchers. The main drawback of those approaches is they cannot be generalized to be used in different imaging modalities and they do not lead to convincing outcomes in some cases.

On the other hand, many researchers utilized thresholding techniques to detect nodules in CT images as a base for the image segmentation. In these techniques, the maximization of the variance between classes is usually employed to segment images. Otsu method is one of the most popular techniques which is used in this domain. Although utilizing Otsu method in multilevel segmentation leads to competitive results in the medical image segmentation, the high computational cost needed by this approach is a challenging task that researchers are still trying to overcome; hence, there is the importance of employing optimization. The authors of this research employed the HHO with Otsu criterion as an objective function to overcome the high computational cost and in the meantime to achieve optimal outcomes.

The Harris hawks optimization (HHO) algorithm proposed in Heidari et al. [22] is a new metaheuristic algorithm that has the potential to be used for multilevel image thresholding. This algorithm is swarm based and has been developed to efficiently handle continuous optimization tasks and produce high-quality solutions. The HHO algorithm is still relatively new and has not been tested sufficiently on real-world problems. In this research, therefore, it is applied to the multilevel image segmentation of chest images of COVID-19 patients. Its segmentation results are then analyzed and compared against those obtained by the Otsu method. The authors in this research claim that the use of metaheuristic algorithms in image segmentation domain lowers the amount of computations required to locate the best threshold configuration.

3 Implementation of Harris hawks optimization algorithm for multilevel image segmentation

Harris hawk’s optimization is a population-based swarm intelligence algorithm. It mimics the hunting strategy of Harris hawks, which is mathematically modeled to address different optimization problems. A detailed explanation of the background and fundamentals of the algorithm can be found in Heidari et al. [22]. Similar to other meta-heuristics, HHO consists of two main phases: diversification (exploration) and intensification (exploitation) which mimics the attacking strategy of Harris hawks when they are hunting their prey, where the attacking strategy is changed based on the circumstances of the prey. The attacking strategy that is simulated in HHO is explained in the following subsections. Figure 1 illustrates the phases of the HHO algorithm.

Phases of Harris hawks optimization [22]

3.1 Diversification phase (exploration)

In HHO, the Harris hawks represent the solutions; the best solution in each iteration represents the prey. Harris hawks perch randomly in some places, and they are one of the two strategies to attack their prey. The perch positions of the Harris hawks are based on the positions of other family members, as modeled in Eq. (1) for the condition q < 0.5, or they perch randomly in tall trees, which is modeled by Eq. (1) for the condition q ≥ 0.5. The first rule in the model (see Eq. 1) represents the random generation of solutions, and the second rule represents the difference between the position of the best solution (prey) and the average location of the group (hawks):

where \(X\left( t \right)\) is the position vector of a hawk in the tth iteration, \(X_{{{\text{rand}}}} \left( t \right)\) is a randomly selected hawk from the current population, \(X_{{{\text{rabbit}}}} \left( t \right)\) is the position of the rabbit prey, \(X\left( t \right)\) is the current position vector of the hawks, \(r_{1}\), \(r_{2}\), \(r_{3}\), \(r_{4}\), and \(q\) are random numbers that are updated in each iteration, \({\text{UB}}\) and \({\text{LB}}\) are the upper bound and lower bound of the variables, respectively, and \(X_{m}\) is the average position of the current population of hawks. The average position \(X_{m}\) can be defined as shown in Eq. (2):

3.2 Switch between diversification (exploration) and intensification (exploitation)

The HHO algorithm can switch between exploration and exploitation according to the escaping energy E of the prey. The mathematical model for the energy of the prey can be defined as shown in Eq. (3):

In HHO, \(E_{0}\) randomly changes inside the interval (− 1, 1) at each iteration. This requires E to be decreased linearly proportional to the number of iterations.

3.3 Intensification phase (exploitation)

Harris hawks execute a surprise dive to pounce on their prey. However, the prey has the power or capability to escape from this risky situation. The prey’s chance of escaping attack can be represented by r as follows:

3.3.1 Soft (smooth) besiege strategy

If the prey has some energy, it tries to escape from the hawks by doing random jumps. However, the Harris hawks surround the prey softly to exhaust it and then execute a surprise attack. This process can happen when the chance of the prey escaping, r, equals r ≥ 0.5, (i.e., unsuccessful) and the escaping energy of the prey, E, equals E ≥ 0.5. This process can be modeled by Eqs. (4) and (5) as follows:

where \(\Delta X\left( t \right)\) is the difference between the current location and the vector of the rabbit in iteration t and \(r_{5}\) is a random number between 0 and 1, which represents the random bounce force of the rabbit throughout the escaping criteria.

3.3.2 Hard besiege strategy

If the prey has a little escaping energy (|E|< 0.5) and it becomes exhausted (unsuccessfully escaping, r ≥ 0.5), the Harris hawks surround the prey and perform a surprise attack. This situation can be modeled by Eq. (6) as follows:

3.3.3 Soft (smooth) besiege strategy and progressive quick pounce

If the prey has some energy to escape (which implies that |E|≥ 0.5), it can successfully escape (r < 0.5). In this case, the Harris hawks use a smooth (soft) besiege to attack the prey. During the escaping process, a simulation of the zigzag motion of the prey can be performed using a Lévy flight (LF) operator. Based on the tricky movements of the prey, the Harris hawks try to modify their pouncing strategy gradually.

The Harris hawks can perform the soft besiege by deciding their next position as shown in Eq. (7):

The Harris hawks try to adjust their movement by comparing the current pounce result and the previous one. If the result is not good, they will pounce based on the LF as shown in Eq. (8):

where D is the dimension of problem, S is a random vector of size 1 × D, and LF is the Lévy flight function. Based on the previous assumption of the soft besiege, the Harris hawks update their position by Eq. (9) as follows:

3.3.4 Hard besiege strategy and progressive quick pounce

The Harris hawks apply the hard besiege strategy when the prey has a little energy to escape (|E|< 0.5) and it also has a chance to successfully escape (r < 0.5). To perform this strategy, the Harris hawks try to reduce the distance between their average position \(X_{m}\) and that of the prey. The overall process can be modeled by Eq. (10) as follows:

where \(Y\) and \(Z\) are obtained using the new rules in Eqs. (11) and (12), respectively:

3.3.5 Pseudocode of Harris hawks optimization algorithm for multilevel thresholding

The pseudocode of the procedures of the HHO algorithm employed for multilevel thresholding in this research is described in Algorithm 1.

There are three primary phases in the HHO algorithm. The following are the processes and their computational complexity (i.e., time complexity) [20].

-

1.

Initiation process \(\to \,O\left( {\text{N}} \right)\).

-

2.

Updating the locations of all Harris hawks\(\to \,O\left( {T \times N \times D} \right)\).

-

3.

Determining the best location \(\to \,O\left( {T \times N} \right)\).

where T is the maximum iteration, N: population size, D: the dimension of the problem.

Therefore, the total complexity of the HHO algorithm can be described as \(O(N \times \left( {T + TD + 1} \right)\).

4 Experiment and results

The World Health Organization declared the outbreak of the novel coronavirus (2019-nCoV) an ongoing global pandemic in March 2020. As of today, tens of millions of cases have been confirmed, and with millions of lives lost due to COVID-19, it is becoming one of the deadliest pandemics in history.

This pandemic has shown that public health is not only a medical problem; it has also become the main common concern of all scientific fields. Therefore, interdisciplinary teams of scientists from all over the world have conducted novel research and studies to find efficient solutions that can be used to control the consequences of the pandemic and to prevent its return and the emergence of similar pandemics.

4.1 Dataset

In order to evaluate the efficiency of the proposed approach, a publicly available imaging dataset was obtained from the Cancer Imaging Archive. The obtained dataset consists of a set of CT images (762 × 762 pixels with a spacing 1.08 × 1.08 mm and 0.98 × 0.98 mm and a slice thickness 3.14 mm) [9,10,11]. The dataset contains chest imaging with clinical and genomic correlates and represents a rural COVID-19-positive population (COVID-19-AR). The dataset contains a total of about 105 patients. For the purpose of this research, 20 randomly selected patient records (hereinafter referred to as 10 individual datasets) were used to test and evaluate the proposed approach.

4.2 Experimental settings

Prior to the experiment, the initial parameter values were set and tuned experimentally (see Table 1). In the experiment, the proposed algorithm starts by generating 30 different possible solutions, where each solution represents the set of possible thresholds. The number of iterations is set to 150 iterations to allow the analysis of the convergence behavior of the algorithm. In order to evaluate the stability and the reliability of the generated outcomes, the experiment is repeated 25 times. The lower bound (LB) and the upper bound (UB) are set to 0 and 255, respectively (for grayscale images), and the value of the dimension parameter in the algorithm is set dynamically in accordance with the number of thresholds in each specific experiment.

4.3 Results

Table 2 presents a sample from each dataset and presents a comparison between the outcomes generated by the Otsu method as a ground truth against the threshold values generated by the proposed approach. To keep Table 2 short and readable, the outcomes of only the first 10 datasets are presented in the table. However, the full details of all the experimental outcomes can be accessed via the URL linked to this paper (https://sites.google.com/view/hhocovid19/home). In Table 2, the third and fourth columns present the Otsu threshold values and the threshold values generated by the proposed approach, respectively. The fourth column presents the most frequent threshold values that appeared after the 25th execution of the algorithm. The last column provides the number of times (NOT), which is the number of times out of 25 that the most frequent threshold values appeared.

It is obvious from Table 2 that the values generated by the proposed approach are either the same or very close to the Otsu threshold values. Moreover, even in the cases where there is no complete match between the outcomes of the Otsu method and those generated by the proposed approach, the difference is negligible.

The two main inputs that are fed into the proposed approach are the group of CT images and the number of thresholds. The optimal threshold values are generated as output. The optimizer is employed to maximize the value of the between-class variance, as suggested by the Otsu criterion.

Table 3 presents the average fitness values (i.e., between-class variance) that are achieved when the proposed approach is applied to the 10 datasets. Figure 2 displays the histograms of five different images picked randomly from different datasets along with the threshold values which are represented as red vertical lines. These threshold values represent the outcome of applying the proposed approach.

5 Evaluation of results

In order to evaluate the efficiency of the proposed approach, the optimal thresholds obtained from the experiment are supplied to the evaluation process as an input. The evaluation starts, as illustrated in Fig. 3, by generating a segmented image in accordance with the optimal thresholds. The generated segmented image and the Otsu segmented image (i.e., ground truth) are then compared and tested to determine the degree of similarity.

Three different similarity metrics are used to evaluate the accuracy of the experiment: the peak signal-to-noise ratio (PSNR), the structural similarity index (SSIM), and the Jaccard index. These similarity metrics are explained in the following subsections.

5.1 Peak signal-to-noise ratio (PSNR)

The complications associated with subjective quality assessment necessitate the use of automated objective quality assessment methods to measure the quality differences between processed images. Among the well-known objective quality assessment methods is the PSNR, which is based on the mean squared error (MSE) and can be calculated as follows [51]:

where N is the number of pixels in the given image (both images must be of the same size) and \(x_{i}\), \(y_{i}\) are the pixel values in both of the images to be tested.

The PSNR can be calculated as follows:

where K is the maximum range of the image pixel values (K = 255 for grayscale images).

The PSNR and MSE are high-quality measures for testing the proposed approach. The PSNR is widely used in digital image processing applications. In this work, the PSNR gives a value of 100 dB under one condition only, i.e., when both images (to be compared) are identical, which leads to a division by 0, that is controlled by a conditional statement. When the PSNR result exceeds 30 dB, the human visual system would find it more difficult to differentiate between the two images. However, when the PSNR result is lower than 30 dB, this indicates that the human visual system would have the ability to notice the differences between the two images.

5.2 Structural similarity index (SSIM)

The SSIM is another well-known measure that can be used to assess the quality of the output generated by the proposed approach. The SSIM is based on the computation of three factors: luminance, contrast, and structure. The SSIM can be defined as follows [1]:

where \(\left( {x,y} \right) = \left( {2\mu_{x} \mu_{y} + C_{1} } \right)/(2 \mu_{x}^{2} + C_{1} )\), \(c\left( {x,y} \right) = \left( {2\sigma_{x} \sigma_{y} + C_{2} } \right)/(2 \sigma_{x}^{2} + C_{2} )\), and \(s\left( {x,y} \right) = \left( {\sigma_{xy} + C_{3} } \right)/(\sigma_{x} \sigma_{y} + C_{3} )\), where \(\mu_{x} , \mu_{y} , \sigma_{x} , \sigma_{y} ,\) and \(\sigma_{xy}\) are the local means, standard deviations, and cross-covariance of the two compared images, respectively.

If \(\alpha \, = \, \beta \, = \, \gamma \, = \, 1\) and \(C_{3} = C_{2} /2\), the equation can be simplified as follows:

The SSIM results are given in values that range between [0, 1], where 1 indicates a perfect match between the two images and 0 indicates no match.

5.3 Jaccard similarity index

The Jaccard similarity index measures the similarity of two sets of data (two images) on a range from 0 to 100%. This similarity index compares the pixels in two images to see which of them are shared and which are distinct. It counts the number of pixels that are shared by the two images, counts the total number of pixels in both images, divides the number of shared pixels by the total number of pixels, and finally multiplies the number by 100. This percentage gives the similarity between the two images. This similarity index is simple and vulnerable to small samples sizes. It can be defined as follows [15]:

5.4 Similarity results

Table 4 presents the average value of the PSNR between each segmented image (i.e., generated by the proposed approach) and its respective ground-truth image (i.e., generated by the Otsu method) that was obtained after repeating the same experiment 25 times. The table shows the average PSNR values when 2, 3, 4, and 5 thresholds are applied to segment the datasets.

The PSNR values presented in Table 4 indicate that the proposed approach is able to achieve very accurate results, where the PSNR value of 100 dB clearly indicates a high level of accuracy.

Tables 5 and 6 present the average values of the SSIM and Jaccard index, respectively. Three main observations can be made about these outcomes. First, there is a high match between the segmented images and the ground-truth images when the ratio between the matched pixels and the total number of pixels is calculated. Second, there is a general tendency by the proposed approach to achieve better results when the number of thresholds is relatively small. Third, most of the records in Tables 5 and 6 are segmented at accuracies greater than 98%, which clearly confirms the robustness of the results generated by the proposed approach.

6 Discussion and analysis

The average total time (in sec) required to process each image is shown in Table 7, from which it can be noted that the proposed approach converges in the early stages before reaching 150 iterations. As shown in the table, there is a significant difference between the total time to complete 150 iterations and the time needed to converge. This further reinforces the findings on the effectiveness and suitability of the proposed approach for medical image segmentation because it can find a fast and accurate solution in a domain where both time and accuracy are crucial factors in real-time medical applications.

As shown in Table 7, there is a positive correlation between the time to converge and the number of thresholds. Therefore, the time to converge is proportional to the number of segmented clusters. It is remarkable that the time to converge in most images is about 7–15% of the total time when the number of thresholds = 2. Moreover, even when the number of thresholds = 5, which is a case considered to require a longer time for convergence to be achieved, the time to converge in most images is about 50–55% of the total time.

Figure 4 shows the convergence plots of three sample images at different threshold values. It is evident that the convergence behavior is present in all of the images regardless of the number of thresholds.

Table 8 presents the average number of iterations at which the convergence starts for each dataset. It can be seen that the number of iterations required to converge is proportional to the number of thresholds. However, as also shown in the table, a small number of iterations can lead to the required convergence and achieve optimal results.

The main contribution of this research is to achieve the optimal results provided by Otsu’s algorithm while trying to reduce the massive number of computations it require. A set of experiments have been designed in order to compare and prove (a) the accuracy of outcomes and how they are matching the outcomes generated by Otsu, (b) the degree of similarity between the proposed approach and Otsu, and (c) the required time that the proposed approach need to reach the solution.

In Table 2, the threshold values generated by the Otsu method (i.e., the third column) are compared with the threshold values generated by the proposed approach (i.e., the fourth column). The values in the two columns are either the same or very close which proves the accuracy of outcomes generated by the proposed approach. Furthermore, Table 3 presents the average between-class variance which is an indicator to the accuracy of the outcomes. All results and comparisons are presented in details in the URL linked to this paper (https://sites.google.com/view/hhocovid19/home).

In Tables 4, 5, and 6, the degree of similarity between each segmented image (i.e., generated by the proposed approach) and its respective ground-truth image (i.e., generated by the Otsu method) is presented using PSNR, SSIM, and Jaccard index, respectively. The outcomes in these tables confirm again the accurate results that are generated by the proposed approach and how they are competitive when compared with the original method.

In order to present the required time that the proposed approach needs, Tables 7 and 8 present the average total time and the average number of iterations at which convergence starts since the original method (i.e., Otsu’s original method) follows the brute force approach in which all combinations are evaluated.

7 Conclusion

Digital image processing algorithms have been supporting the medical field over the last few decades, and a huge number of algorithms have been specifically designed to assist radiologists and specialists to identify or study the occurrence or the development of diseases. The universal transmission of COVID-19 has encouraged researchers in this domain to develop medical imaging solutions to help medical experts with decision making and diagnosis.

Image segmentation methods that are based on thresholding are considered an essential part of various domains including medical image processing. However, multilevel thresholding methods give improved segmentation results when compared to the standard single thresholding methods. Among the wide variety of available segmentation methods, the traditional standard benchmark statistical methods give optimal results at the cost of intensive computation when used for multilevel thresholding. Therefore, various metaheuristic optimization algorithms have been proposed and combined with statistical thresholding algorithms to reduce the number of required computations. In this research, the recent metaheuristic HHO algorithm was combined with the standard benchmark Otsu algorithm to perform image segmentation using multilevel image thresholding. The HHO algorithm is swarm based and has been proposed to efficiently handle continuous optimization tasks and produce high-quality solutions. The proposed approach was tested on a publicly available imaging dataset that contains chest images with clinical and genomic correlates and represents a rural COVID-19-positive population (COVID-19-AR).

Three well-known similarity metrics (PSNR, SSIM, and Jaccard index) were used to evaluate the accuracy of the results generated by the proposed approach against those produced by the Otsu method. After running the experiments 25 times, the average value of the PSNR was around 97%, 88%, 76%, and 76% when the number of thresholds was 2, 3, 4, and 5, respectively. The average value of the SSIM showed an overlap match of 99.9%, 99.7%, 97.9%, and 97.2% for 2, 3, 4, and 5 thresholds, respectively, and the Jaccard index showed a similarity of 99.9%, 99.6%, 95.4%, and 93.2% for the same number of thresholds. These results indicate that the proposed approach can achieve a massive reduction in the computational cost and simultaneously match the quality of images produced by the Otsu method for the same threshold values. It is worth mentioning here that the number of iterations in the experiment was limited to 150 due to the limitation of time. Thus, we envisage that forcing the algorithm to iterate a larger number of times will lead to better outcomes in terms of the degree of matching quality.

References

Abdel-Basset M, Mohamed R, Elhoseny M, Chakrabortty RK, Ryan M (2020) A hybrid COVID-19 detection model using an improved marine predators algorithm and a ranking-based diversity reduction strategy. IEEE Access 8:79521–79540

Ahmadi M, Kazemi K, Aarabi A, Niknam T, Helfroush MS (2019) Image segmentation using multilevel thresholding based on modified bird mating optimization. Multimed Tools Appl 78(16):23003–23027. https://doi.org/10.1007/s11042-019-7515-6

Akram S, Javed MY, Akram MU, Qamar U, Hassan A (2016) Pulmonary nodules detection and classification using hybrid features from computerized tomographic images. J Med Imaging Health Inform 6(1):252–259. https://doi.org/10.1166/jmihi.2016.1600

Baby Resma KP, Nair MS (2018) Multilevel thresholding for image segmentation using krill herd optimization algorithm. J King Saud Univ Comput Inf Sci. https://doi.org/10.1016/j.jksuci.2018.04.007

Chen H, Heidari AA, Zhao X, Zhang L, Chen H (2020) Advanced orthogonal learning-driven multi-swarm sine cosine optimization: framework and case studies. Expert Syst Appl 144:113113. https://doi.org/10.1016/j.eswa.2019.113113

Chen H, Li S, Heidari AA, Wang P, Li J, Yang Y, Wang M, Huang C (2020) Efficient multi-population outpost fruit fly-driven optimizers: framework and advances in support vector machines. Expert Syst Appl 142:112999. https://doi.org/10.1016/j.eswa.2019.112999

Chen H, Yang C, Heidari AA, Zhao X (2020) An efficient double adaptive random spare reinforced whale optimization algorithm. Expert Syst Appl 154:113018. https://doi.org/10.1016/j.eswa.2019.113018

da Silva Sousa JRF, Silva AC, de Paiva AC, Nunes RA (2010) Methodology for automatic detection of lung nodules in computerized tomography images. Comput Methods Programs Biomed 98(1):1–14. https://doi.org/10.1016/j.cmpb.2009.07.006

Desai S, Baghal A, Wongsurawat T, Jenjaroenpun P, Powell T, Al-Shukri S, Gates K, Farmer P, Rutherford M, Blake G, Nolan T (2020) Chest imaging representing a COVID-19 positive rural U.S. population. Sci Data 7(1):414. https://doi.org/10.1038/s41597-020-00741-6

Desai S, Baghal A, Wongsurawat T, Al-Shukri S, Gates K, Farmer P, Rutherford M, Blake GD, Nolan T, Powell T, Sexton K, Bennett W, Prior F (2020) Data from chest imaging with clinical and genomic correlates representing a rural COVID-19 positive population data set. The Cancer Imaging Archive

Desai S, Baghal A, Wongsurawat T, Al-Shukri S, Gates K, Farmer P, Rutherford M, Blake GD, Nolan T, Powell T, Sexton K, Bennett W, Prior F (2020) Chest imaging with clinical and genomic correlates representing a rural COVID-19 positive population

Dorgham O, Fisher M, Laycock S (2009) Performance of a 2D–3D image registration system using (lossy) compressed x-ray CT. Ann BMVA 3:1–11

Dorgham O, Ryalat MH, Naser MA (2020) Automatic body segmentation for accelerated rendering of digitally reconstructed radiograph images. Inform Med Unlocked 20:100375. https://doi.org/10.1016/j.imu.2020.100375

Dorgham OM, Alweshah M, Ryalat MH, Alshaer J, Khader M, Alkhalaileh S (2021) Monarch butterfly optimization algorithm for computed tomography image segmentation. Multimed Tools Appl. https://doi.org/10.1007/s11042-020-10147-6

Eelbode T, Bertels J, Berman M, Vandermeulen D, Maes F, Bisschops R, Blaschko MB (2020) Optimization for medical image segmentation: theory and practice when evaluating with dice score or jaccard index. IEEE Trans Med Imaging 39(11):3679–3690

Elaziz MA, Lu S (2019) Many-objectives multilevel thresholding image segmentation using knee evolutionary algorithm. Expert Syst Appl 125:305–316. https://doi.org/10.1016/j.eswa.2019.01.075

Elaziz MA, Oliva D, Ewees AA, Xiong S (2019) Multi-level thresholding-based grey scale image segmentation using multi-objective multi-verse optimizer. Expert Syst Appl 125:112–129. https://doi.org/10.1016/j.eswa.2019.01.047

Fisher M, Dorgham O, Laycock SD (2013) Fast reconstructed radiographs from octree-compressed volumetric data. Int J Comput Assist Radiol Surg 8(2):313–322. https://doi.org/10.1007/s11548-012-0783-5

Furqan S, Gulistan R, Alejandro FF (2019) Computer-aided detection of lung nodules: a review. J Med Imaging 6(2):1–11. https://doi.org/10.1117/1.JMI.6.2.020901

Gezici H, Livatyalı H (2022) Chaotic Harris hawks optimization algorithm. J Comput Des Eng 9(1):216–245. https://doi.org/10.1093/jcde/qwab082

Gruetzemacher R, Gupta A, Paradice D (2018) 3D deep learning for detecting pulmonary nodules in CT scans. J Am Med Inform Assoc 25(10):1301–1310. https://doi.org/10.1093/jamia/ocy098

Heidari AA, Mirjalili S, Faris H, Aljarah I, Mafarja M, Chen H (2019) Harris hawks optimization: algorithm and applications. Futur Gener Comput Syst 97:849–872. https://doi.org/10.1016/j.future.2019.02.028

Jiang H, Ma H, Qian W, Gao M, Li Y (2018) An automatic detection system of lung nodule based on multigroup patch-based deep learning network. IEEE J Biomed Health Inform 22(4):1227–1237. https://doi.org/10.1109/JBHI.2017.2725903

Jo HH, Hong H, Mo Goo J (2014) Pulmonary nodule registration in serial CT scans using global rib matching and nodule template matching. Comput Biol Med 45:87–97. https://doi.org/10.1016/j.compbiomed.2013.10.028

Kapur JN, Sahoo PK, Wong AKC (1985) A new method for gray-level picture thresholding using the entropy of the histogram. Comput Vis Graph Image Process 29(3):273–285. https://doi.org/10.1016/0734-189X(85)90125-2

Keshani M, Azimifar Z, Tajeripour F, Boostani R (2013) Lung nodule segmentation and recognition using SVM classifier and active contour modeling: a complete intelligent system. Comput Biol Med 43(4):287–300. https://doi.org/10.1016/j.compbiomed.2012.12.004

Kumar AS, Kumar A, Bajaj V, Singh, GK (2018) Fractional-order darwinian swarm intelligence inspired multilevel thresholding for mammogram segmentation. Paper presented at the 2018 international conference on communication and signal processing (ICCSP)

Li Z, Sun Y, Zhang L, Tang J (2021) CTNet: context-based tandem network for semantic segmentation. IEEE Trans Pattern Anal Mach Intell. https://doi.org/10.1109/TPAMI.2021.3132068

Liang H, Jia H, Xing Z, Ma J, Peng X (2019) Modified grasshopper algorithm-based multilevel thresholding for color image segmentation. IEEE Access 7:11258–11295. https://doi.org/10.1109/ACCESS.2019.2891673

Shapiro LG, Stockman GC (2001) Computer vision, 1st edn. Prentice Hall, Hoboken

Magalhães Barros Netto S, Corrêa Silva A, Acatauassú Nunes R, Gattass M (2012) Automatic segmentation of lung nodules with growing neural gas and support vector machine. Comput Biol Med 42(11):1110–1121. https://doi.org/10.1016/j.compbiomed.2012.09.003

Messay T, Hardie RC, Rogers SK (2010) A new computationally efficient CAD system for pulmonary nodule detection in CT imagery. Med Image Anal 14(3):390–406. https://doi.org/10.1016/j.media.2010.02.004

Mirjalili S, Gandomi AH, Mirjalili SZ, Saremi S, Faris H, Mirjalili SM (2017) Salp swarm algorithm: a bio-inspired optimizer for engineering design problems. Adv Eng Softw 114:163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002

Mirjalili S, Lewis A (2016) The whale optimization algorithm. Adv Eng Softw 95:51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

Mirjalili S, Mirjalili SM, Lewis A (2014) Grey wolf optimizer. Adv Eng Softw 69:46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

Mousavirad SJ, Ebrahimpour-Komleh H (2020) Human mental search-based multilevel thresholding for image segmentation. Appl Soft Comput 97:105427. https://doi.org/10.1016/j.asoc.2019.04.002

Narayanan BN, Hardie RC, Kebede TM, Sprague MJ (2019) Optimized feature selection-based clustering approach for computer-aided detection of lung nodules in different modalities. Pattern Anal Appl 22(2):559–571. https://doi.org/10.1007/s10044-017-0653-4

Oliva D, Hinojosa S, Osuna-Enciso V, Cuevas E, Pérez-Cisneros M, Sanchez-Ante G (2019) Image segmentation by minimum cross entropy using evolutionary methods. Soft Comput 23(2):431–450. https://doi.org/10.1007/s00500-017-2794-1

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

Pare S, Kumar A, Singh GK, Bajaj V (2020) Image segmentation using multilevel thresholding: a research review. Iran J Sci Technol Trans Electr Eng 44(1):1–29. https://doi.org/10.1007/s40998-019-00251-1

Pare S, Bhandar AK, Kumar A, Singh GK (2019) Rényi’s entropy and bat algorithm based color image multilevel thresholding. In: Machine intelligence and signal analysis. Advances in intelligent systems and computing, pp 71–84, Springer, Singapore

Pehrson LM, Nielsen MB, Ammitzbøl Lauridsen C (2019) Automatic pulmonary nodule detection applying deep learning or machine learning algorithms to the LIDC-IDRI database: a systematic review. Diagnostics. https://doi.org/10.3390/diagnostics9010029

Pu J, Zheng B, Leader JK, Wang X-H, Gur D (2008) An automated CT based lung nodule detection scheme using geometric analysis of signed distance field. Med Phys 35(8):3453–3461. https://doi.org/10.1118/1.2948349

Ryalat MH, Laycock S, Fisher M (2017) Automatic removal of mechanical fixations from ct imagery with particle swarm optimisation. Paper presented at the bioinformatics and biomedical engineering, Cham

Ryalat MH, Laycock S, Fisher M (2017) A fast and automatic approach for removing artefacts due to immobilisation masks in X-ray CT. Paper presented at the 2017 IEEE EMBS international conference on biomedical and health informatics (BHI). IEEE, New York

Saremi S, Mirjalili S, Lewis A (2017) Grasshopper optimisation algorithm: theory and application. Adv Eng Softw 105:30–47. https://doi.org/10.1016/j.advengsoft.2017.01.004

Serhat Ozekes OO, Ucan ON (2007) Nodule detection in a lung region that’s segmented with using genetic cellular neural networks and 3D template matching with fuzzy rule based thresholding. Korean J Radiol 9(1):1–9

Sharma A, Kumar S, Singh SN (2019) Brain tumor segmentation using DE embedded OTSU method and neural network. Multidimens Syst Signal Process 30(3):1263–1291. https://doi.org/10.1007/s11045-018-0603-3

Tan M, Deklerck R, Jansen B, Bister M, Cornelis J (2011) A novel computer-aided lung nodule detection system for CT images. Med Phys 38(10):5630–5645. https://doi.org/10.1118/1.3633941

Tarkhaneh O, Shen H (2019) An adaptive differential evolution algorithm to optimal multi-level thresholding for MRI brain image segmentation. Expert Syst Appl 138:112820. https://doi.org/10.1016/j.eswa.2019.07.037

Tedmori S, Al-Najdawi N (2012) Lossless image cryptography algorithm based on discrete cosine transform. Int Arab J Inf Technol 9(5):471–478

Upadhyay P, Chhabra JK (2020) Kapur’s entropy based optimal multilevel image segmentation using crow search algorithm. Appl Soft Comput 97:105522. https://doi.org/10.1016/j.asoc.2019.105522

Zhang G, Jiang S, Yang Z, Gong L, Ma X, Zhou Z, BaoLiu CQ (2018) Automatic nodule detection for lung cancer in CT images: a review. Comput Biol Med 103:287–300. https://doi.org/10.1016/j.compbiomed.2018.10.033

Zhou C, Tian L, Zhao H, Zhao K (2015) A method of two-dimensional otsu image threshold segmentation based on improved firefly algorithm. Paper presented at the 2015 IEEE international conference on cyber technology in automation, control, and intelligent systems (CYBER). IEEE, New York

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Data availability

Publication of the annotated images database is available through this public repository (https://sites.google.com/view/hhocovid19/data-set).

Code availability

The source code is available at this public repository (https://sites.google.com/view/hhocovid19/source-code).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ryalat, M.H., Dorgham, O., Tedmori, S. et al. Harris hawks optimization for COVID-19 diagnosis based on multi-threshold image segmentation. Neural Comput & Applic 35, 6855–6873 (2023). https://doi.org/10.1007/s00521-022-08078-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00521-022-08078-4